4 Data normalisation and data aggregation

- Be able to aggregate PSM-level information to protein-level using the

aggregateFeaturesfunction in theQFeaturesinfrastructure - Recognise the importance of log transformation (

logTransform) - Know how to normalise your data (using

normalize) and explore the most appropriate methods for expression proteomics data

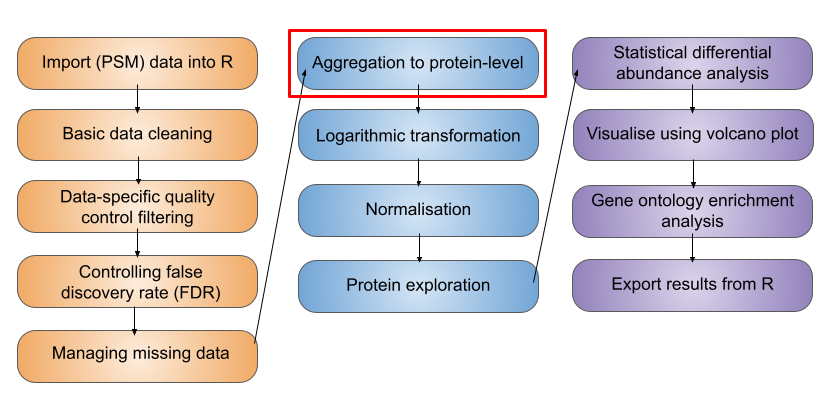

Let’s start by recapping which stage we have reached in the processing of our quantitative proteomics data. In the previous two lessons we have so far learnt,

- how to import our data into R and store it in a

QFeaturesobject - work with the structure of

QFeaturesobjects - clean data using a series of non-specific and data-dependent filters

In this next lesson we will continue processing the PSM level data, aggregate our data to protein-level intensities, explore log transformation, and finally normalise the protein-level data ready for downstream statistical testing.

4.1 Feature aggregation

Let’s recap our data,

cc_qfAn instance of class QFeatures containing 2 set(s):

[1] psms_raw: SummarizedExperiment with 45803 rows and 10 columns

[2] psms_filtered: SummarizedExperiment with 25687 rows and 10 columns Now that we are satisfied with our PSM quality, we need to aggregate our PSM level data upward to the protein level. In a bottom-up MS experiment we initially identify and quantify peptides. Further, each peptide can be identified and quantified on the basis of multiple matched spectra (the peptide spectrum matches, PSMs). We now want to group information from all PSMs that correspond to the same master protein accession.

To aggregate upwards from PSM to proteins we can either do this (i) directly (from PSM straight to protein, if we are not interested in peptide level information) or (ii) include an intermediate step of aggregating from PSM to peptides, and then from the peptide level to proteins. Which you do will depend on your biological question. For the purpose of demonstration, let’s perform the explicit step of PSM to peptide aggregation.

4.1.1 Step 1: Sum aggregation of PSMs to peptides

In your console run the aggregateFeatures function on your QFeatures object. We wish to aggregate from PSM to peptide level so pass the argument i = "psms_filtered" to specify we wish to aggregate the PSM data, and then pass fcol = "Sequence" to specify we wish to group by the peptide amino acid sequence.

cc_qf <- aggregateFeatures(cc_qf,

i = "psms_filtered",

fcol = "Sequence",

name = "peptides",

fun = base::colSums,

na.rm = TRUE)

cc_qfAn instance of class QFeatures containing 3 set(s):

[1] psms_raw: SummarizedExperiment with 45803 rows and 10 columns

[2] psms_filtered: SummarizedExperiment with 25687 rows and 10 columns

[3] peptides: SummarizedExperiment with 17231 rows and 10 columns We see we have created a new assay called peptides and summarised 25687 PSMs into 17231 peptides.

There are many ways in which we can combine the quantitative values from each of the contributing PSMs into a single consensus peptide or protein quantitation. Simple methods for doing this include calculating the peptide or master protein quantitation based on the mean, median or sum PSM quantitation. Although the use of these simple mathematical functions can be effective, using colMeans or colMedians can become difficult for data sets that still contain missing values. Similarly, using colSums can result in protein quantitation values being biased by the presence of missing values.

That said in this case when missing values are not a problem, colSums can provide a beneficial bias towards the more abundant PSMs or peptides, which are also more likely to be accurate. If missing values are present, an alternative would be to use MsCoreUtils::robustSummary, a state-of-the art aggregation method that is able to aggregate effectively even in the presence of missing values (Sticker et al. 2020). See the extended materials for an example of robust summarisation.

4.1.2 Step 2: Sum aggregation of peptides to proteins

Let’s complete our aggregation by now aggregating our peptide level data to protein level data. Let’s again use the aggregateFeatures function and pass fcol = "Master.Protein.Accessions" to specify we wish to group by "Master.Protein.Accessions".

cc_qf <- aggregateFeatures(cc_qf,

i = "peptides",

fcol = "Master.Protein.Accessions",

name = "proteins",

fun = base::colSums,

na.rm = TRUE)

cc_qfAn instance of class QFeatures containing 4 set(s):

[1] psms_raw: SummarizedExperiment with 45803 rows and 10 columns

[2] psms_filtered: SummarizedExperiment with 25687 rows and 10 columns

[3] peptides: SummarizedExperiment with 17231 rows and 10 columns

[4] proteins: SummarizedExperiment with 3823 rows and 10 columns We see we have now created a new assay with 3823 protein groups.

Since we are aggregating all PSMs that are assigned to the same master protein accession, the downstream statistical analysis will be carried out at the level of protein groups. This is important to consider since most people will report “proteins” as displaying significantly different abundances across conditions, when in reality they are referring to protein groups.

4.2 Logarithmic transformation

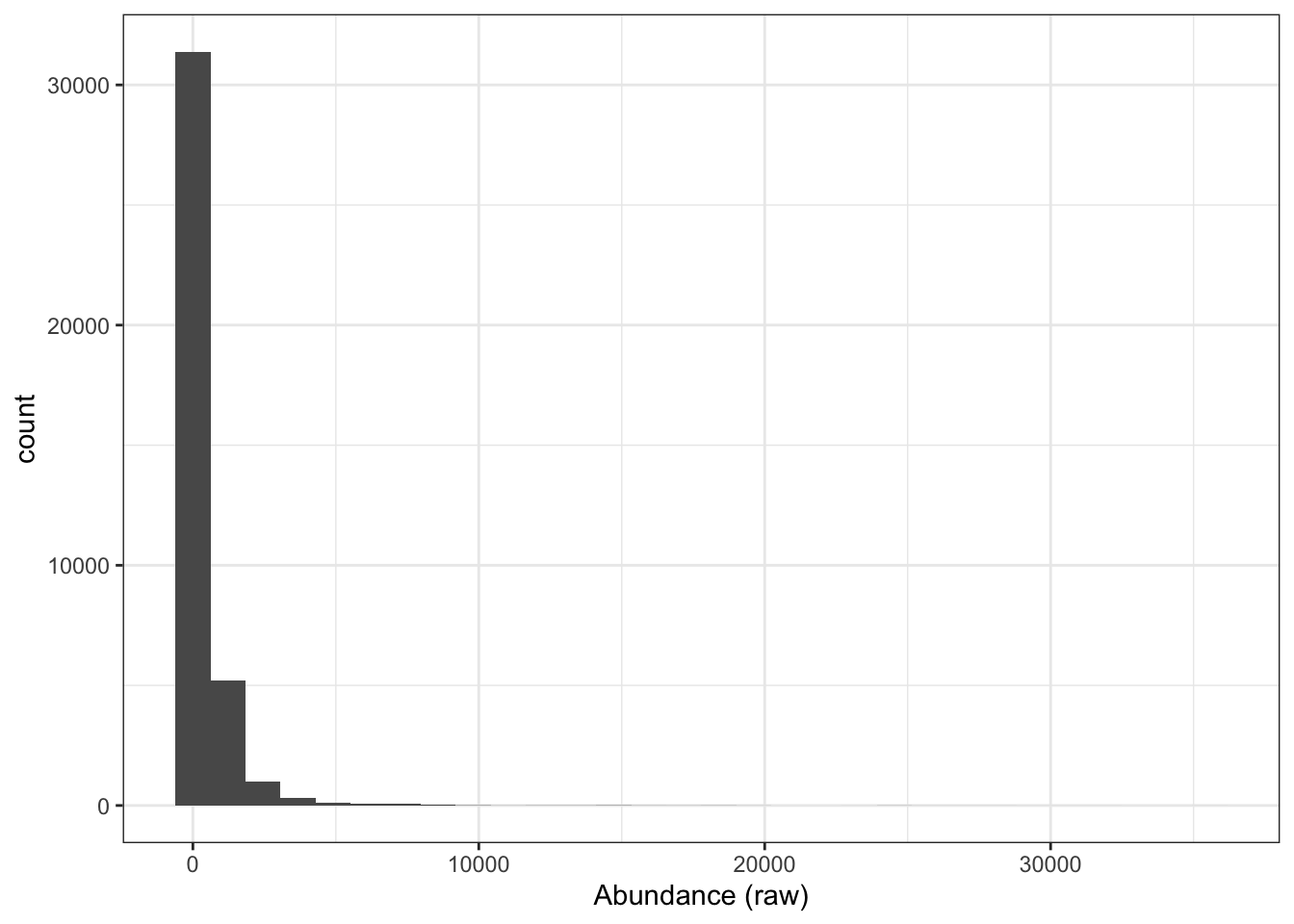

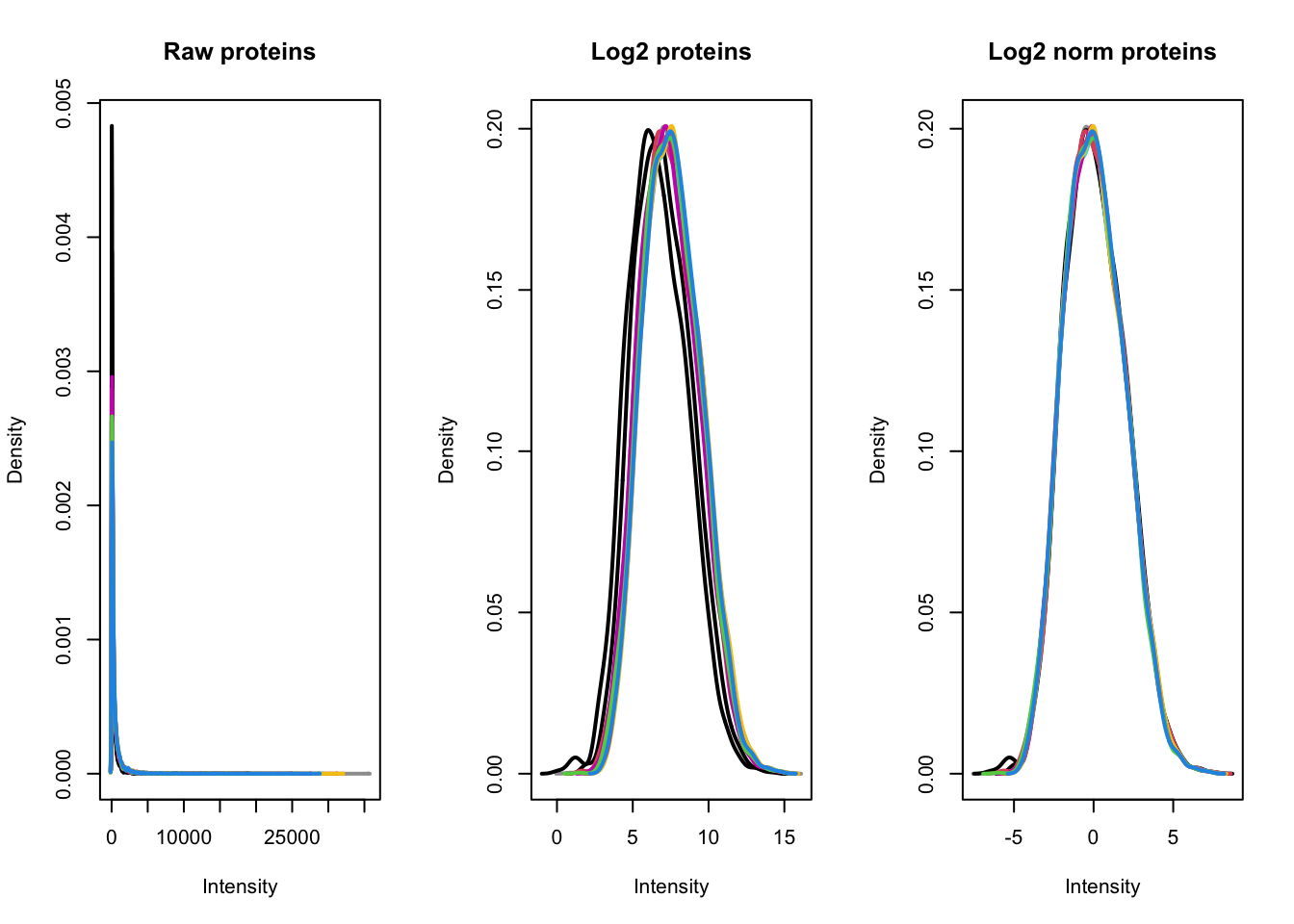

We have now reached the point where we are ready to log2 transform the quantitative data. If we take a look at our current (raw) quantitative data we will see that our abundance values are dramatically skewed towards zero.

## Look at distribution of abundance values in untransformed data

cc_qf[["proteins"]] %>%

assay() %>%

longFormat() %>%

ggplot(aes(x = value)) +

geom_histogram() +

theme_bw() +

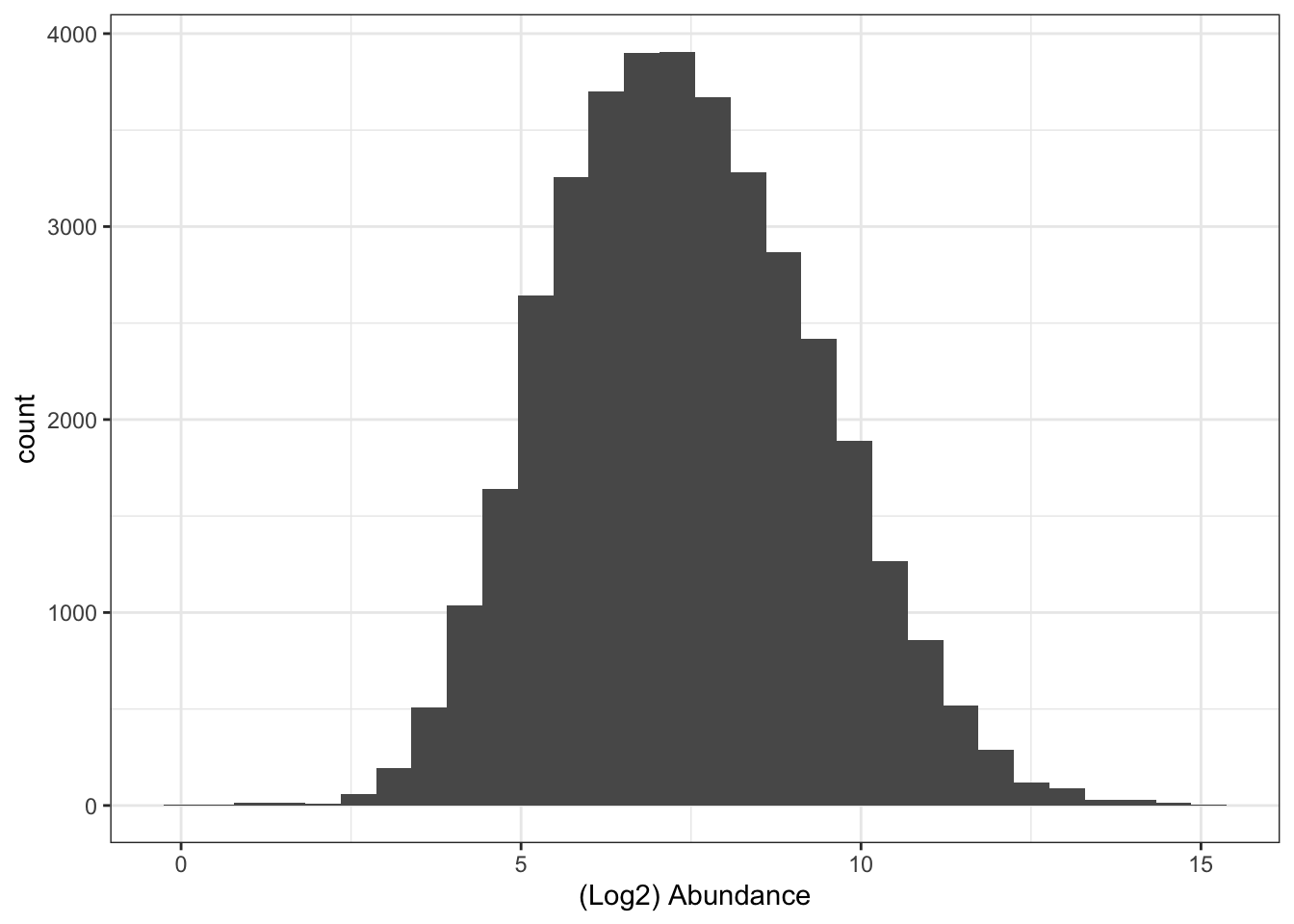

xlab("Abundance (raw)")This is to be expected since the majority of proteins exist at low abundances within the cell and only a few proteins are highly abundant. However, if we leave the quantitative data in a non-Gaussian distribution then we will not be able to apply parametric statistical tests later on. Consider the case where we have a protein with abundance values across three samples A, B and C. If the abundance values were 0.1, 1 and 10, we can tell from just looking at the numbers that the protein is 10-fold more abundant in sample B compared to sample A, and 10-fold more abundant in sample C than sample B. However, even though the fold-changes are equal, the abundance values in A and B are much closer together on a linear scale than those of B and C. A parametric test would not account for this bias and would not consider A and B to be as equally different as B and C. By applying a logarithmic transformation we can convert our skewed asymmetrical data distribution into a symmetrical, Gaussian distribution.

Although there is no mathematical reason for applying a log2 transformation rather than using a higher base such as log10, the log2 scale provides an easy visualisation tool. Any protein that halves in abundance between conditions will have a 0.5 fold change, which translates into a log2 fold change of -1. Any protein that doubles in abundance will have a fold change of 2 and a log2 fold change of +1.

Logarithmic transformation can be applied at any stage of the data processing. This decision will depend upon the other data processing steps being completed and the methods used to do so. For example, many imputation methods work on log transformed data. We have chosen to log transform the data in this example after protein aggregation as we will use the colSums method for summarisation and this method requires the data to not be log transformed.

## Look at distribution of abundance values in untransformed data

cc_qf[["proteins"]] %>%

assay() %>%

longFormat() %>%

ggplot(aes(x = log2(value))) +

geom_histogram() +

theme_bw() +

xlab("(Log2) Abundance")To apply this log2 transformation to our data we use the logTransform function and specify base = 2.

cc_qf <- logTransform(object = cc_qf,

base = 2,

i = "proteins",

name = "log_proteins")Let’s take a look again at our QFeatures object,

cc_qfAn instance of class QFeatures containing 5 set(s):

[1] psms_raw: SummarizedExperiment with 45803 rows and 10 columns

[2] psms_filtered: SummarizedExperiment with 25687 rows and 10 columns

[3] peptides: SummarizedExperiment with 17231 rows and 10 columns

[4] proteins: SummarizedExperiment with 3823 rows and 10 columns

[5] log_proteins: SummarizedExperiment with 3823 rows and 10 columns 4.3 Normalisation of quantitative data

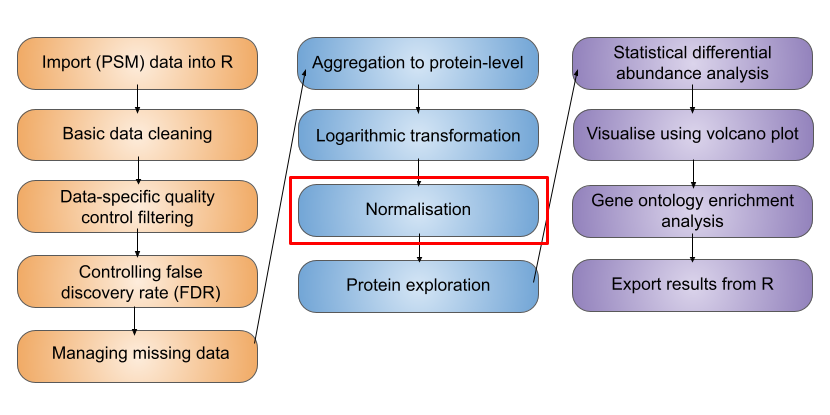

We now have log protein level abundance data to which we could apply a parametric statistical test. However, to perform a statistical test and discover whether any proteins differ in abundance between conditions (here cell cycle stages), we first need to account for non-biological variance that may contribute to any differential abundance. Such variance can arise from experimental error or technical variation, although the latter is much more prominent when dealing with label-free DDA data.

Normalisation is the process by which we account for non-biological variation in protein abundance between samples and attempt to return our quantitative data back to its ‘normal’ condition i.e., representative of how it was in the original biological system. There are various methods that exist to normalise expression proteomics data and it is necessary to consider which of these to apply on a case-by-case basis. Unfortunately, there is not currently a single normalisation method which performs best for all quantitative proteomics datasets.

In QFeatures we can use the normalize function. To see which other normalisation methods are supported within this function, type ?normalize to access the function’s help page.

Of the supported methods, median-based methods work well for most quantitative proteomics data. Unlike using the mean, median-based methods are less sensitive to the outliers which we often have in proteomics datasets. Let’s apply a center median normalisation approach,

cc_qf <- normalize(cc_qf,

i = "log_proteins",

name = "log_norm_proteins",

method = "center.median")Let’s verify the normalisation by viewing the QFeatures object. We can call experiments to view all the assays we have created,

experiments(cc_qf)ExperimentList class object of length 6:

[1] psms_raw: SummarizedExperiment with 45803 rows and 10 columns

[2] psms_filtered: SummarizedExperiment with 25687 rows and 10 columns

[3] peptides: SummarizedExperiment with 17231 rows and 10 columns

[4] proteins: SummarizedExperiment with 3823 rows and 10 columns

[5] log_proteins: SummarizedExperiment with 3823 rows and 10 columns

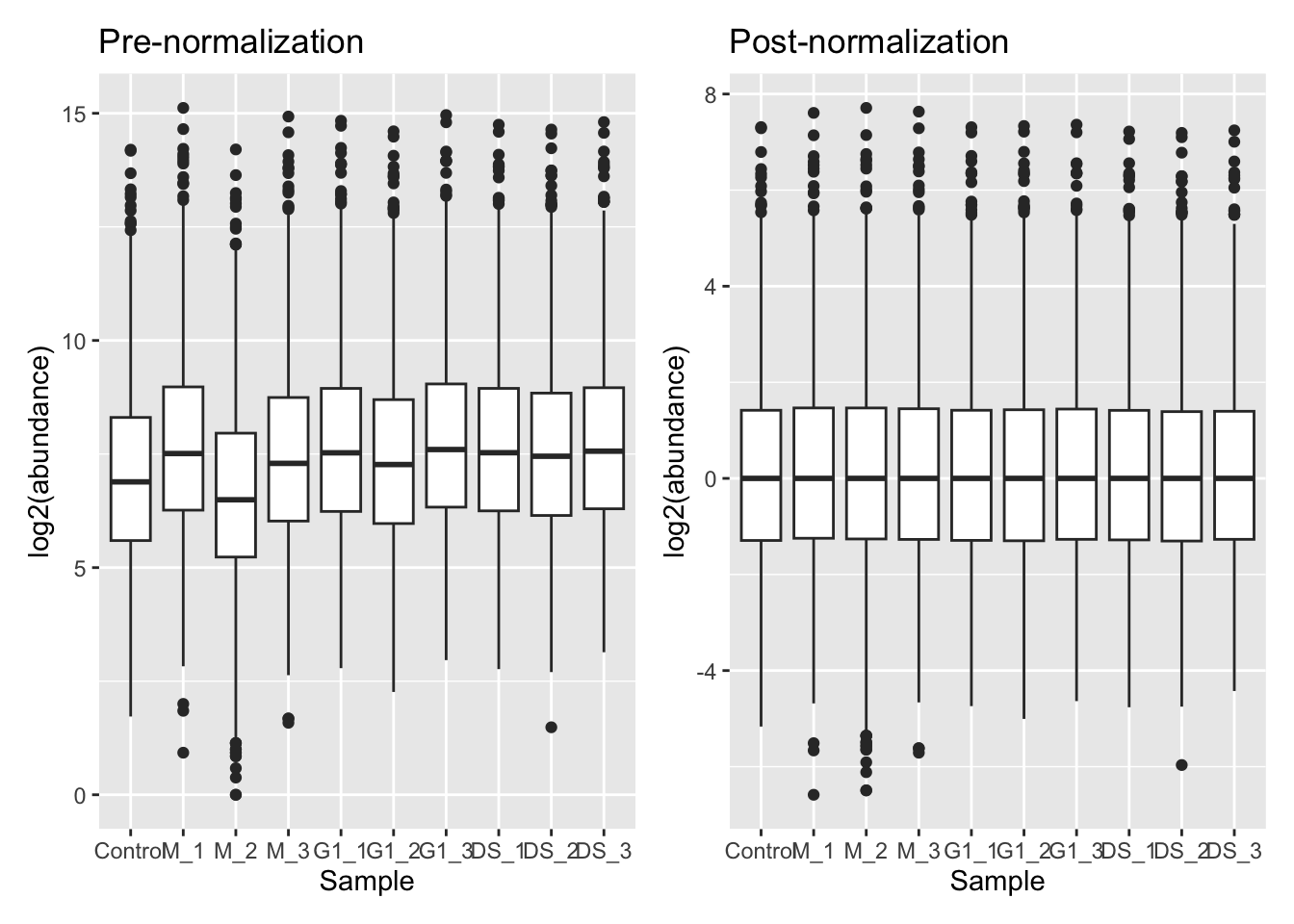

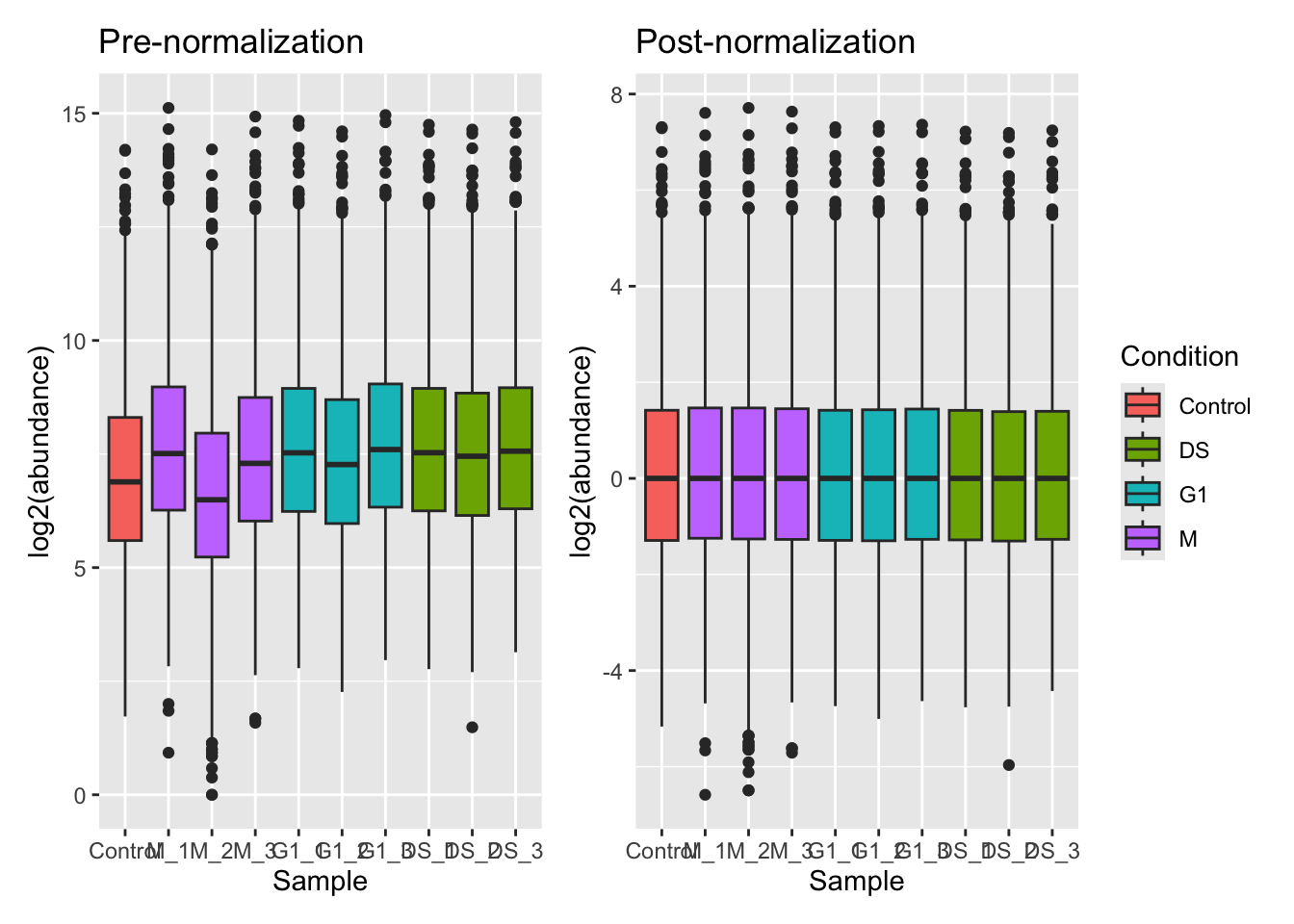

[6] log_norm_proteins: SummarizedExperiment with 3823 rows and 10 columnsTo visualise the effect that log transformation followed by normalisation has had on our data, we can also generate density plots. Density plots allow us to visualise the distribution of quantitative values in our data and to see where the majority of intensities lie.

From our plots we can see that the center median normalisation has shifted the curves according to their median such that all of the final peaks are overlapping. This is what we would expect given that all of our samples come from the same cells and our treatment/conditions don’t case massive changes in the proteome.

To explore the use of alternative normalisation strategies, the NormalyzerDE Willforss, Chawade, and Levander (2018) package can be used to compare normalisation approaches. Please refer to the Using NormalyzerDE to explore normalisation methods section.

- Aggregation from lower level data (e.g., PSM) to high level identification and quantification (e.g., protein) is achieved using the

aggregateFeaturesfunction, which also creates explicit links between the original and newly createdassays. - Expression proteomics data should be log2 transformed to generate a Gaussian distribution which is suitable for parametric statistical testing. This is done using the

logTransformfunction. - To remove non-biological variation, data normalisation should be completed using the

normalizefunction. To help users decide which normalisation method is appropriate for their data we recommend using thenormalyzerfunction to create a report containing a comparison of methods.